Fresh papers for the weekend: NeuroAI roundup #1

Short summaries of NeuroAI papers to fuel your weekend reads

Before we get to the papers, quick announcement: my institution, Mila, is hosting a 4-day NeuroAI workshop in October right here in Montreal. Confirmed speakers include Yoshua Bengio, Alison Gopnik, Paul Cisek, Adrienne Fairhall, and many more. It should be awesome! More info and registration here.

Self-supervised video pretraining yields human-aligned visual representations

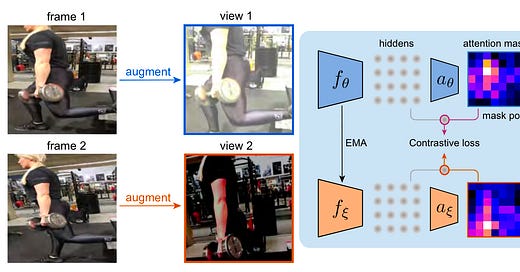

This paper trains a ResNet with contrastive learning on video datasets, finding that the resulting representations are more human-aligned than ones trained on images. The key ingredients are 1) curating a dataset of videos that is aligned to conventional ImageNet and 2) an attention-based, multi-scale pooling mechanism. They demo human alignment with 1) attention maps and 2) error consistency with humans on OOD images. Nice!

Their second set of comparisons is a bit weak: they seem to have cherry-picked 4 out of the 19 Geirhos et al. (2021) tasks rather than using the whole set. Maybe they ran out of time/space? I'd like to see a full comparison to see if it holds up. Nevertheless, very exciting.
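For readers who haven't run into error consistency before, here's a minimal sketch of how I understand the metric: it's Cohen's kappa computed on trial-by-trial correctness, so it asks whether a model and a human get the *same* images wrong more often than their accuracies alone would predict. The function name and the toy data are my own, not from the paper.

```python
def error_consistency(correct_a, correct_b):
    """Cohen's kappa on trial-by-trial correctness of two observers.

    correct_a, correct_b: equal-length lists of booleans, one entry per
    image, True if that observer classified the image correctly.
    """
    if len(correct_a) != len(correct_b) or not correct_a:
        raise ValueError("need two equal-length, non-empty lists")
    n = len(correct_a)
    p_a = sum(correct_a) / n  # accuracy of observer A
    p_b = sum(correct_b) / n  # accuracy of observer B
    # Observed consistency: both right or both wrong on the same trial.
    c_obs = sum(a == b for a, b in zip(correct_a, correct_b)) / n
    # Consistency expected from independent observers with these accuracies.
    c_exp = p_a * p_b + (1 - p_a) * (1 - p_b)
    if c_exp == 1.0:
        # Degenerate case (both always right or always wrong): kappa is
        # undefined, so this sketch returns 0 by convention.
        return 0.0
    return (c_obs - c_exp) / (1 - c_exp)


# Toy data (made up): same accuracy, very different error patterns.
human = [True, True, False, False]
model_same_errors = [True, True, False, False]
model_other_errors = [True, False, True, False]
print(error_consistency(human, model_same_errors))
print(error_consistency(human, model_other_errors))
```

The point of the kappa correction is that two observers with 50% accuracy will agree on half the trials by chance alone, so raw agreement overstates alignment.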

Distinct roles of putative excitatory and inhibitory neurons in the macaque inferior temporal cortex in core object recognition behavior

This is an interesting idea from Kohitij Kar’s group: comparing deep recurrent CNNs to different subclasses of neurons in visual cortex. With the kinds of extracellular recordings they use, they can't directly tell whether spikes are from excitatory cells or inhibitory cells, but they can make an educated guess based on the shape of the waveform (thin spikes: inhibitory, broad spikes: excitatory). They find a better match between excitatory cells and CNNs. Would love to see a follow-up where they add a true inhibitory compartment in the CNN to see if they can match the full diversity of IT.
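To make the waveform trick concrete, here's a rough sketch of a width-based split. It measures the time from the trough of the spike to the following peak and thresholds it. The 0.4 ms cutoff and the function names are illustrative assumptions on my part, not the criterion used in the paper.

```python
def trough_to_peak_ms(waveform, sampling_rate_hz):
    """Time (ms) from the waveform's minimum to the largest value after it."""
    trough_idx = min(range(len(waveform)), key=lambda i: waveform[i])
    after_trough = waveform[trough_idx:]
    peak_offset = max(range(len(after_trough)), key=lambda i: after_trough[i])
    return 1000.0 * peak_offset / sampling_rate_hz


def putative_cell_type(waveform, sampling_rate_hz, threshold_ms=0.4):
    """Label a unit by spike width. threshold_ms is a hypothetical cutoff."""
    width = trough_to_peak_ms(waveform, sampling_rate_hz)
    if width < threshold_ms:
        return "putative inhibitory"  # thin spike
    return "putative excitatory"      # broad spike


# Synthetic mean waveforms sampled at 30 kHz (made-up values).
thin = [0.0, -1.0, -5.0, -1.0, 2.0, 3.0, 1.0, 0.0]
broad = [0.0, -1.0, -5.0] + [-4.0 + 0.3 * i for i in range(17)] + [2.0, 1.0, 0.0]
print(putative_cell_type(thin, 30000))
print(putative_cell_type(broad, 30000))
```

The "putative" in the labels matters: this is an educated guess from spike shape, not a ground-truth cell type.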

A contrastive coding account of category selectivity in the ventral visual stream

Cool paper from Talia Konkle's group on the emergence of brain-like category selectivity in DNNs. They find that deep neural networks trained with self-supervision self-organize into functional modules that match those of the brain – faces, bodies, places, words. That depends on both using the right objective function and presenting the right visual diet.

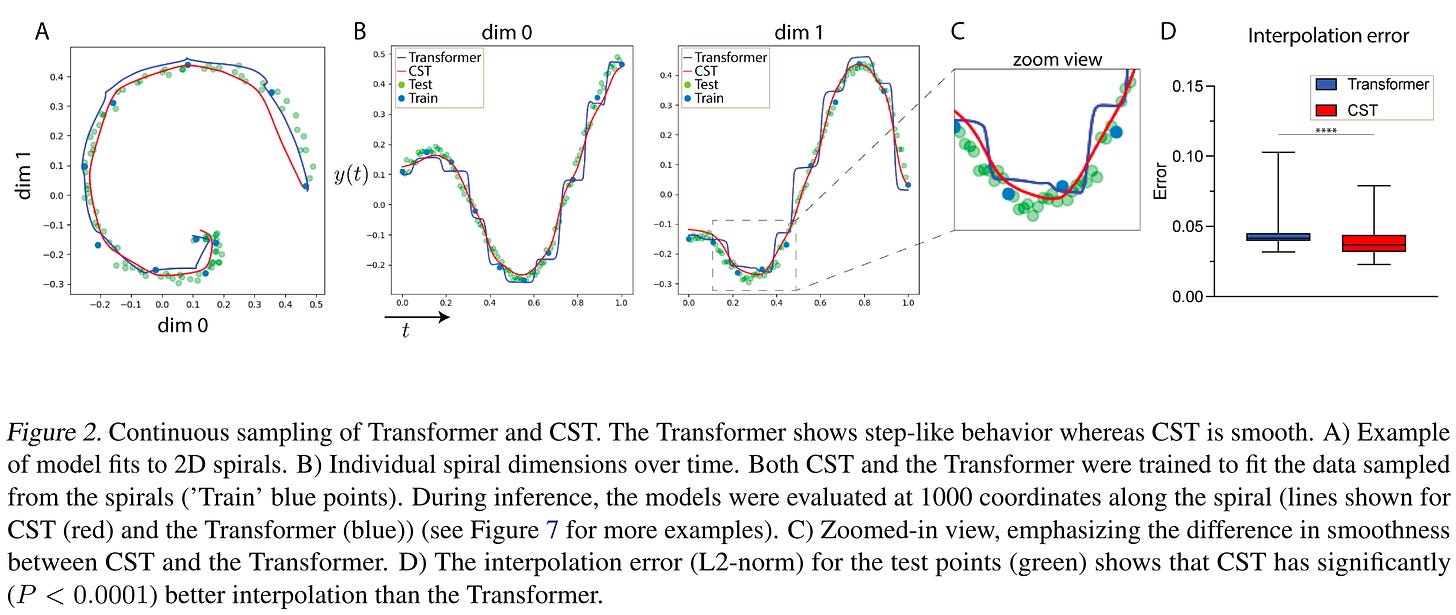

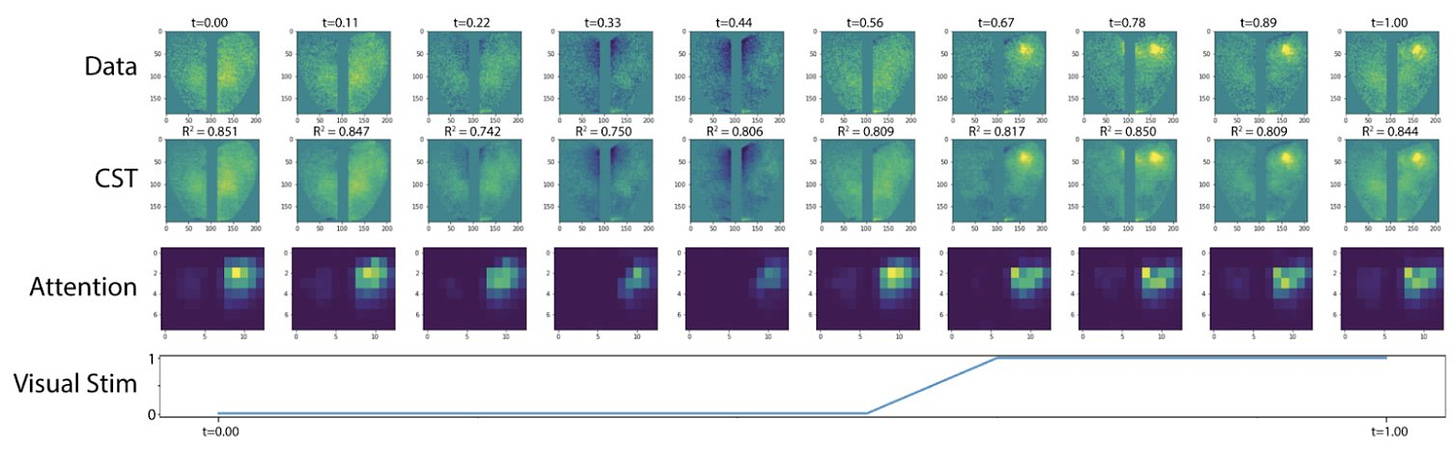

Continuous Spatiotemporal Transformer

Not traditional NeuroAI, but an interesting ML innovation with a use case for neuro data. They create a continuous formulation of transformers in Sobolev space that’s able to smoothly interpolate missing data points. From my read of these lecture notes from Robert Tibshirani, this is conceptually similar to mashing up a thin spline with a transformer. Hence, you get interpolations which are, in some sense, maximally smooth given the data. They show that this is useful for smoothing and denoising calcium data. Slick!

A pair of papers on high-performance speech synthesis in paralyzed patients [1, 2]

Hot off the press (Aug 23rd), we have two papers in Nature that demonstrate speech synthesis from signals recorded in the brain. This is nuts! There are tens of thousands of people in the US who are lucid, yet unable to communicate with the outside world because of brain disease or trauma, for example, because of ALS or brainstem stroke. Think Stephen Hawking or The Diving Bell and the Butterfly. Both groups demo about the same error rate and decoding throughput, on the order of 80 words a minute and 25% word error rate. To put this in context, the previous BCI speed record was 90 characters a minute and ~6% character error rate in the context of handwriting. It’s hard to do an apples-to-apples comparison because the error rates are so different, but given the redundancy in speech and contextual cues, I think this would translate to significantly higher intelligible throughput.
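A quick aside on the units, since word error rate and character error rate are easy to conflate. Both are typically computed the same way: the edit distance between the decoded sequence and the reference, divided by the reference length. The only difference is whether the tokens are words or characters. Here's a small sketch of that, plus a characters-to-words conversion that assumes the usual typing convention of 5 characters per word (my assumption, not a figure from either paper). The example sentences are made up.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two token sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def error_rate(ref, hyp):
    """Edits needed to turn hyp into ref, per reference token."""
    if not ref:
        raise ValueError("reference must be non-empty")
    return edit_distance(ref, hyp) / len(ref)


def chars_to_words_per_minute(chars_per_minute, chars_per_word=5.0):
    """Convert a character rate to a word rate (5 chars/word is assumed)."""
    return chars_per_minute / chars_per_word


# Word error rate: tokens are words.
wer = error_rate("i would like some water please".split(),
                 "i would like water please".split())
# Character error rate: tokens are characters.
cer = error_rate(list("hello"), list("hallo"))
print(wer, cer)
print(chars_to_words_per_minute(90))  # the handwriting record, in words/min
```

Under that 5-characters-per-word assumption, 90 characters a minute works out to 18 words a minute, which gives a sense of how large the jump in raw throughput is.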

The two papers came out on the same day; they must have landed on the editor’s desk at about the same time. Here’s a quick rundown comparing the papers:

Teams: one team from Stanford, led by Jamie Henderson and the late Krishna Shenoy; another team from UCSF, led by Eddie Chang.

Recording tech: Stanford continues the tradition of using Utah arrays with penetrating electrodes, 4 of them, 2 in premotor and 2 in Broca’s area (384 electrodes total); UCSF uses a superficial ECoG array over a larger surface over the superior temporal gyrus (253 electrodes).

Metrics: Stanford reports a 23.8% word error rate decoded at 62 words per minute over a 125,000-word vocabulary. UCSF reports 78 words per minute and a median word error rate of 25% over a 1,000-word vocabulary.

NLP/AI: Stanford’s is fairly old school with an RNN that maps the electrode recordings to phonemes, trained with CTC (connectionist temporal classification). The language model is a trigram. UCSF’s is, as far as I can tell, very similar. Why so old school? These experiments take forever to run. Likely, communication with the FDA must have started in the late 2010s. True neuroscience waits.

Unique findings: on the Stanford side, they found that they couldn’t decode anything from Broca’s area, an area that has been identified for over 150 years as THE brain area for speech. On the UCSF side, they pushed the envelope by adding an avatar. I think this will help comprehension, especially in light of the McGurk effect.
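If CTC in the NLP/AI item above is unfamiliar: the network emits one label per time step, including a special "blank", and the output sequence is read off by merging repeated labels and then dropping blanks. Here's a minimal sketch of that collapse rule applied to greedy, best-label-per-frame output. This is only the generic CTC readout, not either group's decoder (both pair the network with a language model), and the phoneme labels and blank symbol are made up for illustration.

```python
BLANK = "_"  # hypothetical blank symbol for this sketch


def ctc_greedy_decode(frame_labels, blank=BLANK):
    """Collapse per-frame labels: merge consecutive repeats, drop blanks.

    frame_labels: the most likely label at each time step, i.e. the
    per-frame argmax is assumed to have been taken already.
    """
    decoded = []
    previous = None
    for label in frame_labels:
        # Emit a label only when it differs from the previous frame
        # and is not the blank.
        if label != previous and label != blank:
            decoded.append(label)
        previous = label
    return decoded


# Frames for something like "hi": repeats merge, blanks vanish.
print(ctc_greedy_decode(["_", "HH", "HH", "_", "AY", "AY", "AY", "_"]))
# A blank between identical labels keeps a genuine repeat.
print(ctc_greedy_decode(["L", "L", "_", "L"]))
```

The blank is what lets the network say the same phoneme twice in a row: without it, a doubled label would be indistinguishable from one label held over several frames.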

I’ve been following this field for a while; at Facebook, while I was working on silent speech decoding, we funded some earlier work from Eddie Chang and interacted with the late Krishna Shenoy. It’s amazing the strides the field has made in the last 6 years. It may be the case that there’s a hump in BCI, where a minimum threshold of functionality is needed to make it appeal to a large audience. I think we’re getting close to that.