Consciousness in Artificial Intelligence: Insights from the Science of Consciousness

I was super excited to see this paper roll by from folks at Mila (Eric, Yoshua, Xu) and fellow Neuromatchers (Grace & Megan). It’s 88 pages long, but it’s quite approachable and I was able to finish in a couple of sessions.

The authors present what is perhaps a controversial view of consciousness & AI: that it can be assessed, that you can make a grid to score current AI systems based on theories of consciousness, and that current AI systems don’t check all the boxes but all the building blocks exist today.

The authors really home in on the soft (or “easy”) problem of consciousness: how do you replicate the computational and operational aspects of consciousness? If you replicate all of these aspects and you believe in computational functionalism, then you have also solved the “hard problem of consciousness”: why does it feel like anything to be? Computational functionalism is like duck typing for consciousness: if it walks like consciousness, and talks like consciousness, it’s consciousness. It doesn’t matter whether it’s in silico or in vivo. I highly recommend

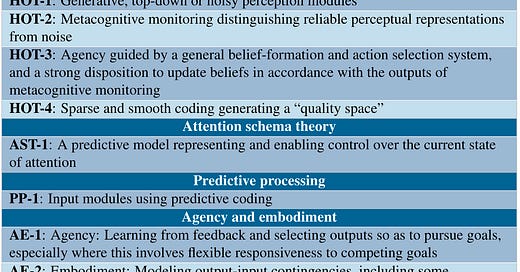

’s recent piece to contextualize these conceptions of consciousness.

What follows is a grand tour of mechanistic theories of consciousness that are compatible with computational functionalism: global workspace theory, higher-order theory, attention schema theory, etc. Notably, this excludes Integrated Information Theory (IIT), which is substrate-dependent. From these theories, they establish a grid of indicator properties: the more of these criteria an agent hits, the higher your confidence that it has the working properties of consciousness. To be clear, however, the criteria listed are said to be neither necessary nor sufficient: they’re more like a laundry list of commonly assumed properties of consciousness. Some of the common themes include that:

A conscious agent is embodied, has recurrent processing, attention, a global workspace and higher-order processing. It uses sparse, disentangled representations to build models of the world.

Each of these factors can be instantiated in systems that exist today, but they argue that all the pieces haven’t been put together. I wasn’t entirely convinced by that argument. They argue, in particular, that a reinforcement learning agent built with transformers doesn’t check all these criteria. But interestingly, the thing that disqualifies it is slightly outside of the grid itself:

So the system arguably imitates planning and using visuomotor control to execute plans, as opposed to actually doing these things. (emphasis mine)

That seems to me a distinction grounded in the centrality of amortized inference in conscious agents, which is outside the grid. Earlier, they also argue that transformers can’t hit all the criteria, because they’re not recurrent. You could argue that transformers are a superset of finite-horizon, unrolled recurrent nets; refusing to lump them in with recurrent processing seems to run counter to computational functionalism (see, e.g., Doerig et al. 2019). In other words, while I’m convinced that it doesn’t feel like anything to be PaLM-E, the core reasons to make that statement lie outside of the grid they propose.
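To make the "unrolled recurrent net" part of that argument concrete, here is a minimal sketch in plain Python. It only illustrates that a recurrence run for a fixed number of steps computes the same thing as a fixed-depth feedforward stack whose layers share weights. It says nothing about transformers specifically, and the scalar hidden state, the weights, and all function names are my own toy assumptions.

```python
import math


def run_recurrent(xs, w_h, w_x, h0=0.0):
    """Run a scalar RNN in a loop: h <- tanh(w_h * h + w_x * x)."""
    h = h0
    for x in xs:
        h = math.tanh(w_h * h + w_x * x)
    return h


def make_layer(w_h, w_x):
    """Build one feedforward 'layer' mapping (h, x) to the next hidden state."""
    return lambda h, x: math.tanh(w_h * h + w_x * x)


def run_unrolled(xs, layers, h0=0.0):
    """Run a fixed-depth feedforward stack where layer t receives input x_t."""
    assert len(layers) == len(xs), "one layer per time step"
    h = h0
    for layer, x in zip(layers, xs):
        h = layer(h, x)
    return h


# Toy inputs and weights (arbitrary values, chosen only for illustration).
xs = [0.5, -1.0, 0.25, 2.0]
w_h, w_x = 0.8, 0.3

# Tying the weights across layers recovers the recurrent computation exactly.
tied_layers = [make_layer(w_h, w_x) for _ in xs]
h_rec = run_recurrent(xs, w_h, w_x)
h_ff = run_unrolled(xs, tied_layers)
print(h_rec == h_ff)
```

Giving each layer its own weights instead of tying them yields a strictly larger family of functions, which is the sense in which a finite-horizon recurrent net is a special case of a deep feedforward one.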

Nevertheless, a thought-provoking read, and a good companion and counterweight to Erik Hoel’s recent book dealing with a theory of consciousness not compatible with computational functionalism, Integrated Information Theory (IIT).

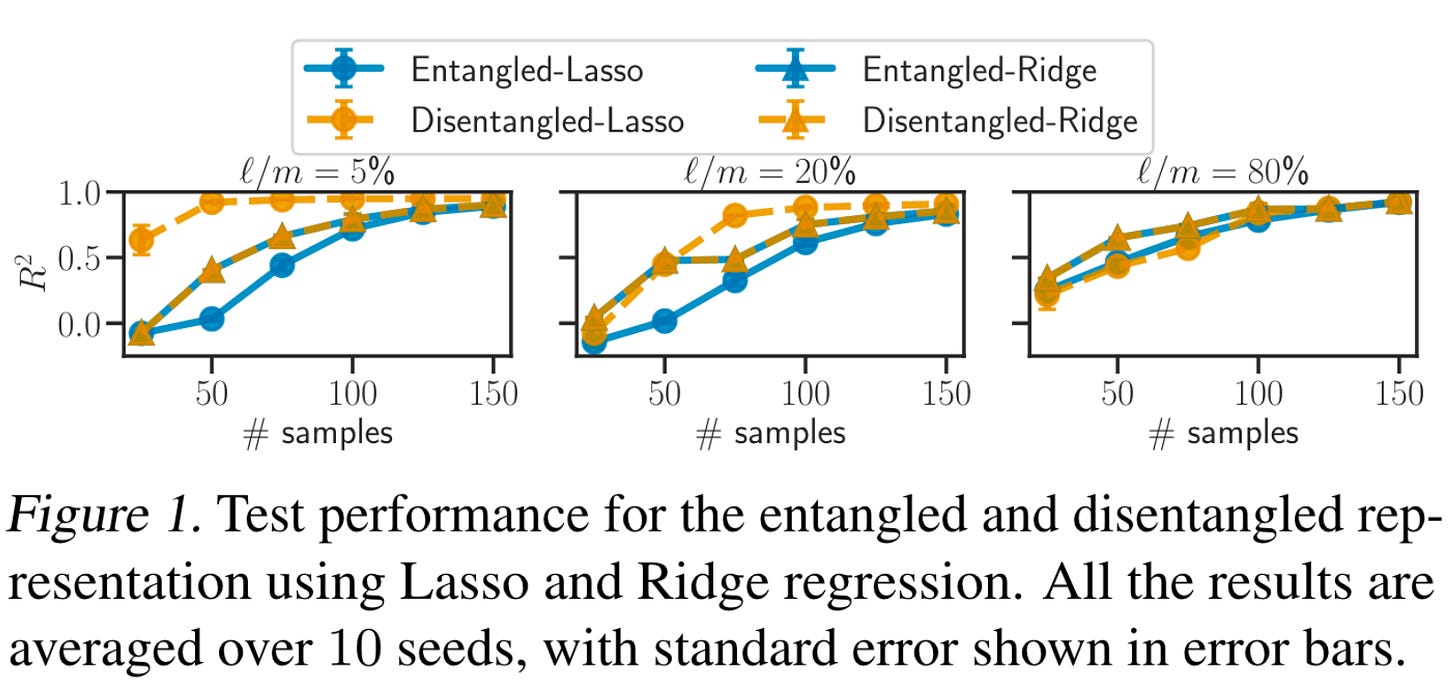

Synergies between Disentanglement and Sparsity: Generalization and Identifiability in Multi-Task Learning

The last few years have seen a rise of methods that find dense features in large-scale datasets, and then use these features for transfer learning, whether it’s vision transformers or large language models. This is in contradistinction to the classic methods of sparse feature learning: sparse coding, dictionary learning, and non-negative matrix factorization. The authors find a fairly general result on the identifiability of sparse hidden factors in deep models in multi-task and meta-learning settings. They propose a straightforward dual-loop algorithm to learn latent features and their sparse loadings and show an advantage of these sparse representations in terms of sample efficiency.
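For intuition about what "sparse loadings" on top of shared features means, here is a minimal sketch of an inner loop only. It assumes the features are fixed and fits one task's linear loadings with an L1 (lasso) penalty using proximal gradient descent (ISTA). That penalty, the optimizer, the toy data, and every name below are my assumptions for illustration, not the paper's actual algorithm; the outer loop that would update the shared features is left out.

```python
def soft_threshold(value, threshold):
    """Proximal operator of the L1 penalty: shrink toward zero, zeroing small values."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def fit_sparse_loadings(features, targets, l1=0.1, lr=0.1, steps=500):
    """Minimize (1/2n) * ||F w - y||^2 + l1 * ||w||_1 over w with ISTA."""
    n_samples = len(features)
    n_features = len(features[0])
    weights = [0.0] * n_features
    for _ in range(steps):
        # Prediction error of the current loadings on each sample.
        residuals = [
            sum(w * f for w, f in zip(weights, row)) - y
            for row, y in zip(features, targets)
        ]
        # Gradient of the squared-error term with respect to each loading.
        grads = [
            sum(r * row[j] for r, row in zip(residuals, features)) / n_samples
            for j in range(n_features)
        ]
        # Gradient step followed by soft-thresholding (the L1 proximal step).
        weights = [
            soft_threshold(w - lr * g, lr * l1) for w, g in zip(weights, grads)
        ]
    return weights


# Toy "latent features" with orthogonal columns; the task uses only feature 0.
features = [
    [1.0, 1.0, 1.0],
    [1.0, -1.0, -1.0],
    [-1.0, 1.0, -1.0],
    [-1.0, -1.0, 1.0],
]
targets = [2.0, 2.0, -2.0, -2.0]  # equals 2 * (first feature column)

loadings = fit_sparse_loadings(features, targets)
print(loadings)
```

In this toy case the loadings on the two irrelevant features stay exactly at zero, while the loading on the relevant one settles slightly below its true value of 2 because of the L1 shrinkage. In a full multi-task setup you would fit one such loading vector per task.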

My NeuroAI take: There’s a long history of sparse coding as a model of the brain. Neuroscientists made strong claims that sparse codes were, in some sense, better than dense codes. Yet, your average transformer works pretty darn well with a dense representation. What gives? Perhaps sparse representations are more useful in certain regimes, for example when examples are few. It would be interesting to link these results to the Baldwin effect on evolutionary timescales: perhaps there is evolutionary pressure towards sparsity because it can make learning more efficient.

MyoSuite

Not a paper but a piece of software that’s highly relevant to this crowd. Guillaume Durandau, who became a prof in mechanical engineering at McGill this February, came to showcase MyoSuite during the Mila NeuroAI reading group. MyoSuite is a very slick software suite capable of simulating muscles and skeletons of the forearm as well as legs in MuJoCo. He made an impassioned pitch that this kind of simulation software could serve as a basis for a new form of translational NeuroAI: with good models of sensorimotor cortex, the spinal cord, and skeletomuscular mechanics, we could build new therapies for those affected by disorders of movement. The key idea is to use these models to work our way backwards to find optimal stimulation patterns. It’s a very exciting research programme.

MyoSuite is hosting the MyoChallenge at this year’s NeurIPS, where you learn to control a simulated human skeleton for fine forearm movement or locomotion. $20k+ in prizes! There’s a month until the deadline, so get in there!

Contrasting action and posture coding with hierarchical deep neural network models of proprioception

The MyoSuite talk reminded me of this paper from the Alexander Mathis lab, which has been in my stack for a while, looking at how the brain learns representations in somatosensory cortex. Using a model of spindles and a skeletomuscular model of the arm, they generated action sequences corresponding to letters. They then trained a neural network on either recognizing the letter that was traced or decoding the trajectory. They found that letter recognition leads to representations that look more like those you would find in single neurons in somatosensory cortex.

My first thought reading this was that this is incredibly cool work: simulations! NeuroAI! Systems that people haven’t looked at through this lens! My second thought was: wait, what? I’m sympathetic to the idea that you can use efference copy to learn good representations in the brain, but this feels like a bit of a stretch. One thing that feels fishy is that you can have fine motor skills without much centralized planning; e.g. think of the octopus. Indeed, the reviews published in eLife, including one from Niko Kriegeskorte, seem to converge on these points: cool idea, great execution, I don’t buy the conclusion. Definitely an interesting new research programme.

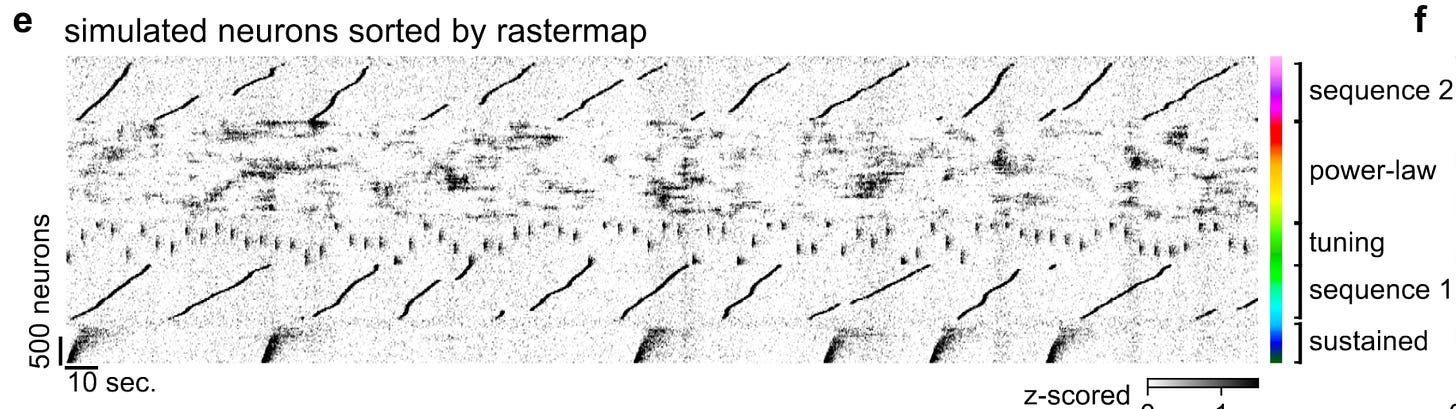

Rastermap: a discovery method for neural population recordings

From Carsen Stringer and Marius Pachitariu (friends from the NMA year 1 days) comes this cool method to sort spiking activity in massively parallel recordings like Neuropixels. You can view it as a combination of k-means and a (semi-)exhaustive search to minimize a mixed local and global loss on groups. It does a really good job on real spiking data, but perhaps most intriguingly, it appears to work very well on ANN activity, as illustrated by this analysis of the population activity in RL networks trained on Atari games. One more tool in the belt of mechanistic interpretability?

Send me your paper!

They say that you’re the average of your 5 closest friends; I certainly had the impression this week that my readings were heavily weighted towards papers 1) authored by people at Mila and 2) from people I met through Neuromatch Academy. If you’re outside of that bubble, and you think your paper should be on my radar, comment or send me an email!